Find Duplicate Tags Using Google Webmaster Tools

Sometimes this is easy, sometimes not so much. The first and foremost rule of SEO is to make sure each of your unique pages has a unique title tag. That’s because the first and foremost rule of Google (or at least sometimes it seems that way) is to avoid the dreaded “duplicate content” monster.

Now the purpose of this SEO tip is not to show you what a title tag is, or how to manipulate it. I’ll leave that to other pages that are already online. (At the bottom of this post is a link to one that you might find of interest.)

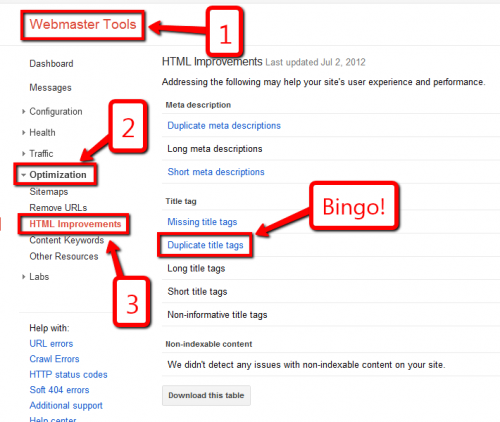

Instead of covering the basics of title tags here, I want to point you to an easy way to find duplicate title tags on your site. The best tool I know of, and it’s free, is of course Google Webmaster Tools. If you haven’t already created a Google account, or tied your Google account into Webmaster Tools, you’ll need to get that done first (learn more about Google Webmaster Tools here). Then, when you visit Google Webmaster Tools, the following illustration will make sense to you:

OK, so we’re done. Right?

Eliminate “Duplicate” Content with Robots.txt and Wild Cards

Not so fast, hombre. (Or mujer, as the case may be.) Depending on the type of platform you’re working on, you might have a challenge on your hands. For example, one of my sites is an ecommerce website built on top of a Magento platform. I noticed that the duplicate title tags for this site numbered in the thousands and thousands, yet I had carefully optimized the website title tags.

After a bit of searching I found that the primary problem was the way Magento let Google reach the same page through several different URLs. For example, the following two URLs take a shopper to the exact same item:

Google will view these addresses, not as one page with duplicate addresses, but separate pages with duplicate content. The mighty G doesn’t like that. It’s so untidy.

So what do we do about that? Allow me to introduce my friend, robots.txt. Allow me to introduce my other friend, wild cards. By using a robots.txt file (which Google allows you to create in Webmaster Tools) and some creative use of wild cards, we can make sure that Google only looks at this jersey at one of the two addresses where Magento would normally serve it.

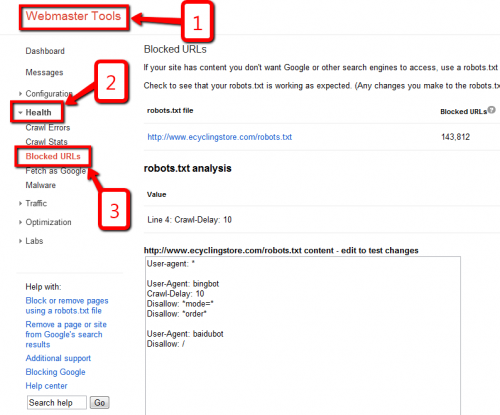

Here’s how to get to the robots.txt tab in the dashboard.

A robots.txt file basically tells Google which URLs not to crawl. So, let’s use robots.txt to tell Google to ignore any content that appears with a category of “men-s-jerseys” (you can see that in the first URL up above). The syntax for that would look like this:

Disallow: /*men-s-jerseys*
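To make the wildcard concrete, here is a minimal Python sketch of how a Google-style pattern can be read: `*` stands for any run of characters, a trailing `$` pins the end, and the rule is matched from the start of the URL path. The two paths are hypothetical stand-ins for the two addresses of the same jersey, and `is_blocked` is an illustrative helper of my own, not part of any Google tool.

```python
import re


def robots_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters, a trailing '$' anchors the end,
    and everything else is matched literally from the start of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(pattern: str, path: str) -> bool:
    """Return True if the Disallow pattern matches the URL path."""
    return robots_pattern_to_regex(pattern).match(path) is not None


rule = "/*men-s-jerseys*"

# Hypothetical paths: the same product reached with and without the category.
category_path = "/men-s-jerseys/home-jersey.html"
direct_path = "/home-jersey.html"

print(is_blocked(rule, category_path))  # True
print(is_blocked(rule, direct_path))    # False
```

Only the path containing the category segment matches, so the other address stays crawlable.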

Now that you have the principle in hand, slow down. If you put a disallow line in your robots.txt file, and if you get it wrong, you could block Google from accessing your entire site. Yikes!

But I didn’t say stop, just slow down. Fortunately, Google builds a handy little test mechanism into Webmaster Tools. Here’s how you use it:

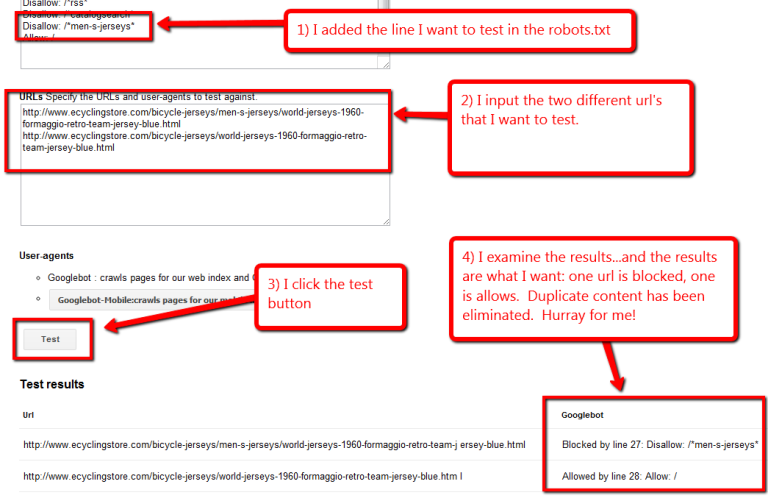

1. I add the line I want to test to the robots.txt file.

2. I enter the two different URLs that I want to test.

3. I click the test button.

4. I examine the results…and the results are what I want: one URL is blocked, one is allowed. Duplicate content has been eliminated. Hurray for me!

The screenshot shows what steps 1 through 4 look like on screen.
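If you want a rough rehearsal before touching the live file, the same four steps can be approximated offline. The sketch below is a simplification under stated assumptions: it applies every Disallow line regardless of User-agent group, ignores Allow lines, and uses two hypothetical URL paths. It is not how Google’s tester is implemented, so treat the Webmaster Tools result as the final word.

```python
import re


def blocked_by(robots_txt: str, path: str) -> bool:
    """Return True if any Disallow line in robots_txt matches path.

    Simplification: User-agent groups and Allow lines are ignored.
    """
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()      # drop comments
        if not line.lower().startswith("disallow:"):
            continue
        pattern = line.split(":", 1)[1].strip()
        if not pattern:                            # empty Disallow blocks nothing
            continue
        anchored = pattern.endswith("$")
        core = pattern[:-1] if anchored else pattern
        regex = ".*".join(re.escape(p) for p in core.split("*"))
        if re.match(regex + ("$" if anchored else ""), path):
            return True
    return False


# Step 1: the line under test.
robots_txt = """
User-agent: *
Disallow: /*men-s-jerseys*
"""

# Step 2: the two addresses of the same item (hypothetical paths).
paths = ["/men-s-jerseys/home-jersey.html", "/home-jersey.html"]

# Steps 3 and 4: run the test and examine the results.
results = {p: blocked_by(robots_txt, p) for p in paths}
for p, blocked in results.items():
    print(p, "blocked" if blocked else "allowed")

# Guard against the "Yikes!" case: the home page must stay crawlable.
assert not blocked_by(robots_txt, "/")

# A slip such as a bare "/" would block every page on the site.
print(blocked_by("Disallow: /", "/home-jersey.html"))  # True
```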

Well, I have no illusions: this process is a bit on the advanced side of SEO. But it’s certainly not beyond your reach, you starry-eyed dreamer. Just use the test tool, ok? Otherwise I’ll worry.

Footnote: Want the basics on title tags? I did a quick Google search (handy tool, this Google thang). I found this fairly useful beginner’s introduction to title tags (and a few other tags as well). In the near future I’ll also post a video tutorial on updating title tags in WordPress, the platform I prefer to work with for the websites I manage.

How do I solve the problem of upper-case and lower-case parameter names in a URL, so that I avoid duplicate title tags? Example of the problem:

/broker-check.asp?BrokerID=302

/broker-check.asp?brokerid=302

Thanks.

To a certain extent it’s going to depend on the application that is creating those parameters. However, before digging too far into that, I’d recommend leaving it for a couple of weeks and then checking again. I’m finding lately that Google is getting pretty good at correcting those types of errors on its own once it recognizes what’s going on. If the problem persists, we have a few options. One would be to find out whether we can correct the systemic problem at the root of it (it sounds like poor programming).
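If it does come down to fixing the application, one possible approach is to normalize parameter names before a URL is ever emitted, so that both spellings collapse to a single form. The Python sketch below is only an illustration: `lowercase_param_names` is a hypothetical helper, and it assumes the application treats parameter names case-insensitively, as the two example URLs suggest.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def lowercase_param_names(url: str) -> str:
    """Return url with every query parameter name lower-cased.

    Parameter values are left untouched; only the names change.
    """
    parts = urlsplit(url)
    pairs = [(name.lower(), value)
             for name, value in parse_qsl(parts.query, keep_blank_values=True)]
    return urlunsplit(parts._replace(query=urlencode(pairs)))


a = lowercase_param_names("/broker-check.asp?BrokerID=302")
b = lowercase_param_names("/broker-check.asp?brokerid=302")

print(a)       # /broker-check.asp?brokerid=302
print(a == b)  # True
```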

Thanks, sir. My duplicate issue is solved.

You could use a canonical URL to eliminate the issue of multiple URLs for the same content. Magento has this as a built-in feature.

I have duplicate content showing on a dynamic website (TomatoCart). I can’t get canonical working.

example:

/137-romantic-house-plans?sid=2f94e48317091d9114bdac052b51ec23

/137-romantic-house-plans?sid=5a76bce5be2d19a513df5c791d578bcc

/137-romantic-house-plans?sid=5d674282e8d99b40b2970af73c5b096f

/137-romantic-house-plans?sid=cf1341f23d5db4c359fab1d0a96a7031

If I block the URL like this:

Disallow: /*/137-romantic-house-plans*

Will Google still see this page?

/137-romantic-house-plans

I would not disallow the page. It’s showing duplicate content because of a URL parameter, namely sid= (which simply stands for “session id”). Google will usually figure out these parameters, given a bit of time, and understand that it’s not duplicate content. To confirm whether it has figured out the sid parameter on your site, go into Webmaster Tools, click “Crawl” on the left and then “URL Parameters.” If you see sid in the list of parameters, then you probably won’t have a problem.
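To see why these count as one page rather than four, here is a small Python sketch that drops the session parameter and compares what is left. `strip_params` is a hypothetical helper written for this illustration, and the assumption that sid never changes the page content is mine, based on the example URLs above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_params(url: str, ignored=frozenset({"sid"})) -> str:
    """Return url without the query parameters named in `ignored`."""
    parts = urlsplit(url)
    kept = [(name, value)
            for name, value in parse_qsl(parts.query, keep_blank_values=True)
            if name not in ignored]
    return urlunsplit(parts._replace(query=urlencode(kept)))


session_urls = [
    "/137-romantic-house-plans?sid=2f94e48317091d9114bdac052b51ec23",
    "/137-romantic-house-plans?sid=5a76bce5be2d19a513df5c791d578bcc",
    "/137-romantic-house-plans?sid=5d674282e8d99b40b2970af73c5b096f",
    "/137-romantic-house-plans?sid=cf1341f23d5db4c359fab1d0a96a7031",
]

unique_pages = {strip_params(u) for u in session_urls}
print(unique_pages)  # {'/137-romantic-house-plans'}
```

All four addresses reduce to the same underlying page, which is the conclusion you want Google to reach about the sid parameter.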

Hi Ross,

I came across your site while I was trying to solve my duplicate title tags.

Here’s an example:

How To Find High PageRank Sites Using ScrapeBox?

/find-high-pagerank-sites-using-scrapebox/

/find-high-pagerank-sites-using-scrapebox

You see, the difference is the ‘/’ and honestly, I have no idea where to start! Can you suggest a solution I could work on? I am using Yoast SEO. I am thinking of using link canonical, but is that safe? I found this over the net:

>> The quickest way to get around this is to edit your index.html page markup and include a canonical link within the head element of your page.

Try this:

<<

What do you think?

Thanks.

~Reginald

Reginald, I was cruising through all my comment spam (all the ones that made it through Akismet), and I “discovered” this unpublished comment. If you are still out there…I do think canonical tags are perfectly safe. Yoast will incorporate those into your head element. If you need more info let me know. Otherwise, embarrassed apologies for missing your comment way last December.